Deploying Microsoft Defender Application Guard for Office

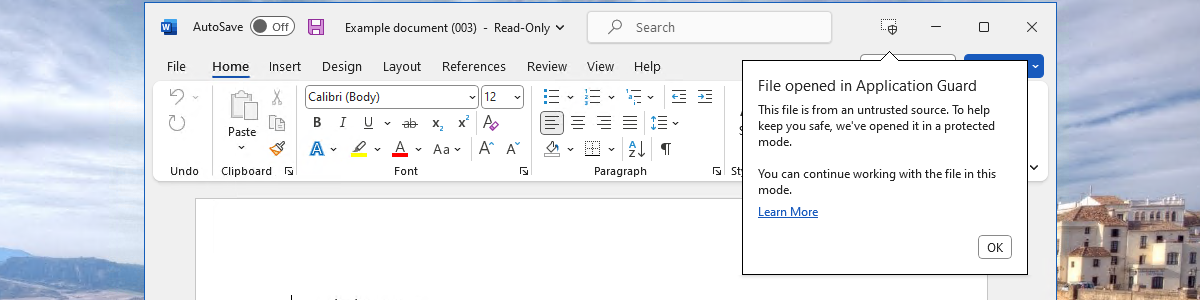

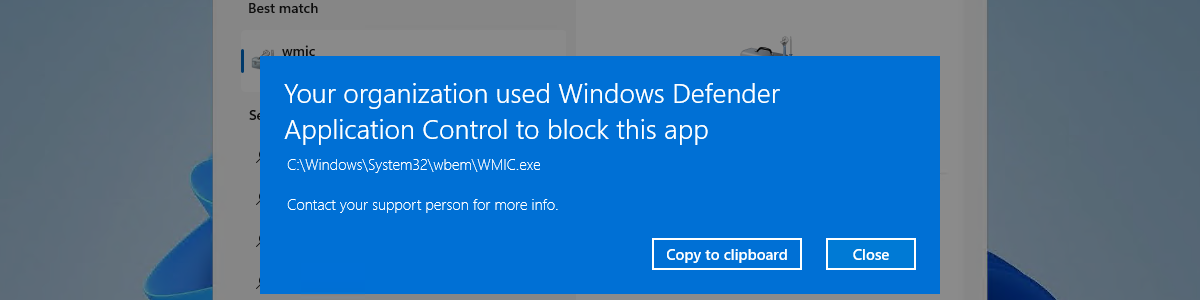

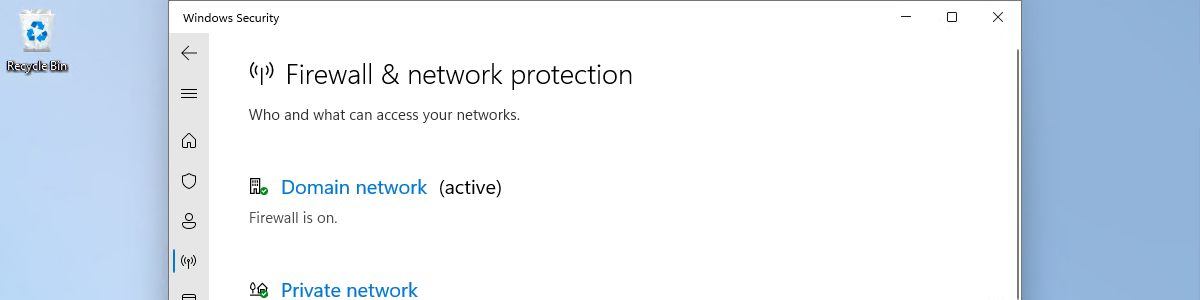

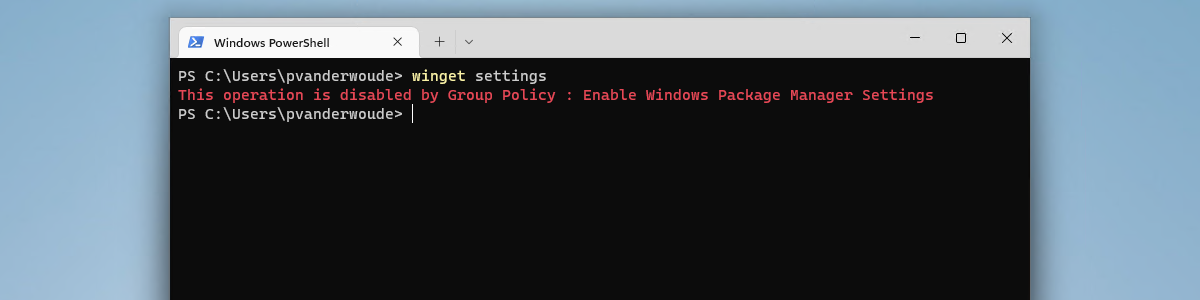

This week is all about Microsoft Defender Application Guard (Application Guard) for Office. It’s a follow-up to this post from almost 2 years ago. At that time the focus was simply on getting started with Application Guard, and Application Guard for Office was largely left out. This time Application Guard for Office is the main focus. Application Guard for Office uses hardware isolation to contain untrusted Office files by running the Office application in an isolated Hyper-V container. That isolation makes sure that anything potentially harmful in those untrusted Office files happens within that container and stays separated from the host operating system. It provides a nice, but resource-intensive, additional security layer. This post will start with a quick …